Unsteady Aerodynamics Simulation of Frontier Region

JAXA Supercomputer System Annual Report April 2016-March 2017

Report Number: R16E0013

- Responsible Representative: Yuichi Matsuo (Aeronautical Technology Directorate, Numerical Simulation Research Unit)

- Contact Information: Atsushi Hashimoto (hashimoto.atsushi@jaxa.jp)

- Members: Takashi Ishida, Yuichi Matsuo, Atsushi Hashimoto, Kenji Hayashi, Kuniyuki Takekawa, Takashi Aoyama, Takahiro Yamamoto

- Subject Category: Aviation (Aircraft)

Abstract

The objective of this study is to realize CFD that can be used in the entire flight envelope by investigating precise CFD technologies that can be applied to unsteady phenomena, such as aerodynamic buffeting and flow separation.

Goal

Please refer to 'Unsteady CFD technologies for the entire flight envelope | Numerical simulation technology | Aeronautical Technology Directorate'.

Objective

Please refer to 'Unsteady CFD technologies for the entire flight envelope | Numerical simulation technology | Aeronautical Technology Directorate'.

References and Links

Please refer to 'Unsteady CFD technologies for the entire flight envelope | Numerical simulation technology | Aeronautical Technology Directorate'.

Use of the Supercomputer

FaSTAR has been developed by JAXA and tuned for JSS2. Its computational speed is among the highest in the world. We are extending its capability to unsteady flow analysis and working toward its practical use.

Necessity of the Supercomputer

An unsteady simulation costs more than 1,000 times as much as a steady simulation, so a supercomputer is needed to complete the computations within a reasonable time.

Achievements of the Year

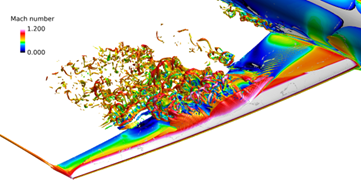

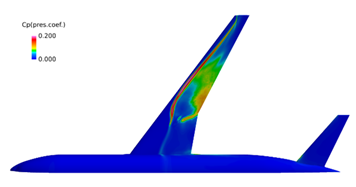

The buffet over the NASA-CRM wing-body configuration was computed with the Zonal-DES method. The Mach number is 0.85, the Reynolds number is 1.5×10⁶, and the angle of attack is 4.87°. The grid was generated with HexaGrid and contains 84 million cells in total. The result is shown in Fig. 1, where the vortices are visualized with the Q-criterion to reveal the separated flow. The flow separates behind the shock wave, and the free shear layer fluctuates. Figure 2 shows the RMS of the surface pressure. The pressure fluctuation is caused by shock wave oscillation, and this behavior is qualitatively similar to the experiment. However, quantitative agreement with the experiment has not yet been obtained, and we are continuing the research to improve the prediction accuracy.
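For readers unfamiliar with the two quantities plotted in Figs. 1 and 2, the following is a minimal sketch of their textbook definitions, not the FaSTAR implementation. The function names `q_criterion` and `pressure_rms` and the sample inputs are hypothetical and chosen only for illustration. The Q-criterion is taken here as Q = ½(‖Ω‖² − ‖S‖²), where S and Ω are the symmetric and antisymmetric parts of the velocity-gradient tensor, and the RMS is computed on the fluctuation about the time mean.

```python
import math


def q_criterion(grad_u):
    """Return Q = 0.5 * (||Omega||^2 - ||S||^2) for a 3x3 velocity gradient.

    grad_u[i][j] holds du_i/dx_j. Q > 0 marks rotation-dominated regions.
    """
    q = 0.0
    for i in range(3):
        for j in range(3):
            s = 0.5 * (grad_u[i][j] + grad_u[j][i])      # strain-rate tensor
            omega = 0.5 * (grad_u[i][j] - grad_u[j][i])  # rotation tensor
            q += 0.5 * (omega * omega - s * s)
    return q


def pressure_rms(samples):
    """Return the RMS of the fluctuation about the time mean of a signal."""
    mean = sum(samples) / len(samples)
    return math.sqrt(sum((p - mean) ** 2 for p in samples) / len(samples))


# Illustrative inputs (hypothetical, not taken from the simulation).
# Solid-body rotation about z (u = -y, v = x): pure rotation, so Q > 0.
rotation = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
# Simple shear (u = y): strain and rotation balance, so Q = 0.
shear = [[0.0, 1.0, 0.0], [0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]

print(q_criterion(rotation), q_criterion(shear))
print(pressure_rms([1.0, -1.0, 1.0, -1.0]))
```

In a flow solver, the same expressions would be evaluated per cell (for Q) and per surface point over the sampled time steps (for the pressure RMS).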

Publications

Non-peer-reviewed articles

1) Takashi Ishida, Atsushi Hashimoto, Yuya Ohmichi, Takashi Aoyama, and Kuniyuki Takekawa. 'Transonic Buffet Simulation over NASA-CRM by Unsteady-FaSTAR Code', 55th AIAA Aerospace Sciences Meeting, AIAA SciTech Forum, (AIAA 2017-0494)

2) Yasushi Ito, Mitsuhiro Murayama, Atsushi Hashimoto, Takashi Ishida, Kazuomi Yamamoto, Takashi Aoyama, Kentaro Tanaka, Kenji Hayashi, Keiji Ueshima, Taku Nagata, Yosuke Ueno, and Akio Ochi. 'TAS Code, FaSTAR and Cflow Results for the Sixth Drag Prediction Workshop', 55th AIAA Aerospace Sciences Meeting, AIAA SciTech Forum, (AIAA 2017-0959)

3) Yuya Ohmichi and Atsushi Hashimoto. 'Numerical Investigation of Transonic Buffet on a Three-Dimensional Wing using Incremental Mode Decomposition', 55th AIAA Aerospace Sciences Meeting, AIAA SciTech Forum, (AIAA 2017-1436)

Presentations

1) Atsushi Hashimoto, Takashi Ishida, Takashi Aoyama, Kenji Hayashi, Kuniyuki Takekawa, Fast Parallel Computing with Unstructured Grid Flow Solver, 28th International Conference on Parallel Computational Fluid Dynamics (Parallel CFD2016), Kobe, Japan, 9-12 May 2016

2) Atsushi Hashimoto, Takashi Ishida, Takashi Aoyama, Kenji Hayashi, Keiji Ueshima, FaSTAR Results of Sixth Drag Prediction Workshop, 6th AIAA CFD Drag Prediction Workshop (DPW6), Washington, D.C. USA, 16-17 June 2016

3) Kenji Hayashi, Takashi Ishida, Atsushi Hashimoto, Takashi Aoyama, Comparison of HexaGrid and BOXFUN Through Computational Grids and CFD Results

Computational Information

- Parallelization Methods: Process Parallelization

- Process Parallelization Methods: MPI

- Thread Parallelization Methods: n/a

- Number of Processes: 512

- Number of Threads per Process: 1

- Number of Nodes Used: 16

- Elapsed Time per Case (Hours): 720

- Number of Cases: 10
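The figures listed above imply a rough core-hour estimate, sketched below. It assumes one core per MPI process (consistent with one thread per process, but still an assumption) and that all ten cases ran for the full listed elapsed time. The estimate describes only these listed cases and is not expected to reproduce the project-wide core time reported under Resources Used, which may include other runs.

```python
# Values copied from the Computational Information list.
processes = 512
threads_per_process = 1
nodes = 16
hours_per_case = 720
cases = 10

# Assumption: each thread of each process occupies exactly one core.
cores = processes * threads_per_process
processes_per_node = processes // nodes
core_hours_per_case = cores * hours_per_case
core_hours_all_cases = core_hours_per_case * cases

print(f"processes per node:   {processes_per_node}")
print(f"core-hours per case:  {core_hours_per_case:,}")
print(f"core-hours, 10 cases: {core_hours_all_cases:,}")
```

Under these assumptions, each node hosts 32 processes, one case uses 368,640 core-hours, and the ten cases together use 3,686,400 core-hours.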

Resources Used

Total Amount of Virtual Cost (Yen): 30,641,773

Breakdown List by Resources

| System Name | Amount of Core Time (core × hours) | Virtual Cost (Yen) |
|---|---|---|
| SORA-MA | 18,036,768.03 | 29,417,188 |
| SORA-PP | 95,735.30 | 817,387 |
| SORA-LM | 2,839.61 | 63,891 |
| SORA-TPP | 0.00 | 0 |

| File System Name | Storage Assigned (GiB) | Virtual Cost (Yen) |
|---|---|---|
| /home | 5,280.62 | 49,812 |
| /data | 23,671.71 | 223,296 |
| /ltmp | 4,581.20 | 43,214 |

| Archiving System Name | Storage Used (TiB) | Virtual Cost (Yen) |
|---|---|---|
| J-SPACE | 8.74 | 26,982 |

Note: Virtual Cost is the cost calculated using the unit price list of the JAXA Facility Utilization program (2016).
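As a consistency check on the breakdown tables above, the short sketch below sums the itemized virtual costs and compares the result with the reported total. The variable names are illustrative. The itemized sum comes to 30,641,770 yen, 3 yen below the reported 30,641,773 yen. This small gap presumably comes from rounding of the per-item costs, although the report does not state this.

```python
# Virtual costs in yen, copied from the breakdown tables.
compute = {
    "SORA-MA": 29_417_188,
    "SORA-PP": 817_387,
    "SORA-LM": 63_891,
    "SORA-TPP": 0,
}
storage = {"/home": 49_812, "/data": 223_296, "/ltmp": 43_214}
archive = {"J-SPACE": 26_982}

reported_total = 30_641_773

# Sum every itemized entry across the three tables.
itemized_total = sum(
    cost for table in (compute, storage, archive) for cost in table.values()
)
difference = reported_total - itemized_total

print(f"itemized total: {itemized_total:,} yen")
print(f"reported total: {reported_total:,} yen")
print(f"difference:     {difference} yen")
```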
