Post-K Priority Issue 8D: Research and development of core technology to innovate aircraft design and operation

JAXA Supercomputer System Annual Report April 2017-March 2018

Report Number: R17ECMP06

Subject Category: Competitive Funding

- Responsible Representative: Yuko Inatomi, Institute of Space and Astronautical Science, Department of Interdisciplinary Space Science

- Contact Information: Ryoji Takaki ryo@isas.jaxa.jp

- Members: Ryoji Takaki, Taku Nonomura, Seiji Tsutsumi, Yuma Fukushima, Soshi Kawai, Yuki Kawaguchi, Ikuo Miyoshi, Satoshi Sekimoto, Hisaichi Shibata, Hiroshi Koizumi, Yuichi Kuya, Tomohide Inari, Ryota Hirashima

Abstract

We are developing a high-speed, high-accuracy computational program based on a quasi-first-principles approach. The program faithfully reproduces the actual flight environment so that the true nature of the fluid phenomena involved can be understood. Specifically, we are developing a high-accuracy compressible flow solver on hierarchical, orthogonal, equally spaced structured grids, equipped with geometric wall models and LES (Large Eddy Simulation) wall models.
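The Python sketch below is a rough illustration of what a hierarchical, orthogonal, equally spaced structured grid can look like. It builds a 2D quadtree of square blocks in which every block holds the same number of equally spaced cells. Blocks that are crossed by a circular wall are refined. The circle stands in for a body surface. The names `Block`, `refine`, `leaves`, and `find_leaf`, and the refinement criterion itself, are hypothetical assumptions. This is not the FFV-HC-ACE data structure.

```python
import math
from dataclasses import dataclass, field
from typing import List


@dataclass
class Block:
    """Hypothetical square block of N x N equally spaced cells (same N everywhere)."""
    x0: float      # lower-left corner
    y0: float
    size: float    # edge length; halves at each refinement level
    level: int
    children: List["Block"] = field(default_factory=list)

    def cell_spacing(self, cells_per_side: int) -> float:
        # Same cell count per block, so spacing is size / N and halves per level.
        return self.size / cells_per_side


def crosses_circle(b: Block, cx: float, cy: float, r: float) -> bool:
    """True if the circle of radius r about (cx, cy) passes through block b."""
    nx = min(max(cx, b.x0), b.x0 + b.size)   # closest point of the block
    ny = min(max(cy, b.y0), b.y0 + b.size)
    near = math.hypot(cx - nx, cy - ny)
    far = max(math.hypot(cx - px, cy - py)
              for px in (b.x0, b.x0 + b.size)
              for py in (b.y0, b.y0 + b.size))
    return near <= r <= far


def refine(b: Block, cx: float, cy: float, r: float, max_level: int) -> None:
    """Recursively split blocks cut by the (assumed circular) wall into four children."""
    if b.level < max_level and crosses_circle(b, cx, cy, r):
        h = b.size / 2
        for dx in (0.0, h):
            for dy in (0.0, h):
                child = Block(b.x0 + dx, b.y0 + dy, h, b.level + 1)
                refine(child, cx, cy, r, max_level)
                b.children.append(child)


def leaves(b: Block) -> List[Block]:
    """Leaf blocks, i.e. the blocks that actually carry cells."""
    if not b.children:
        return [b]
    return [leaf for c in b.children for leaf in leaves(c)]


def find_leaf(b: Block, x: float, y: float) -> Block:
    """Descend the tree to the leaf block containing point (x, y)."""
    while b.children:
        h = b.size / 2
        ix = 1 if x >= b.x0 + h else 0
        iy = 1 if y >= b.y0 + h else 0
        b = b.children[2 * ix + iy]   # children were appended in (dx, dy) order
    return b
```

With 16 cells per block side in a unit domain, a level-3 leaf has spacing 1/(8 × 16) = 1/128. A production solver would typically also limit the level jump between neighbouring blocks so that block-to-block data exchange stays simple. This sketch omits that step.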

Reference URL

N/A

Reasons for using JSS2

Our calculations are large-scale, so they require a large computer such as JSS2. In addition, JSS2 has an architecture similar to that of our target computer, Post-K.

Achievements of the Year

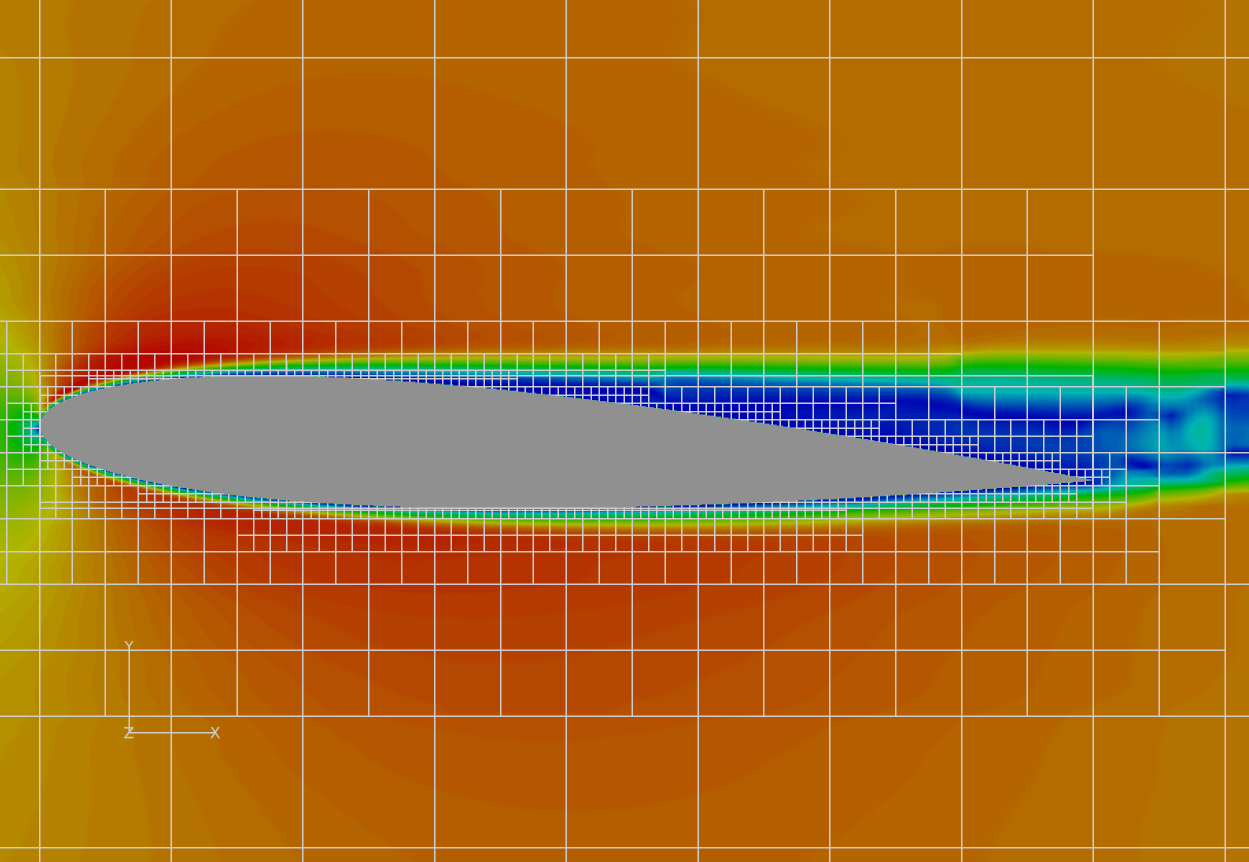

We advanced the development of FFV-HC-ACE, a compressible Navier-Stokes solver that uses hierarchical, orthogonal, equally spaced structured grids. Two new functions were added to the program. The first computes convective and viscous fluxes using image points (IPs). The second handles data communication between oblique blocks. As a preliminary validation of the program, the flow around a two-dimensional NACA0012 airfoil was computed. Fig. 1 shows the Mach number distribution around the airfoil together with the block boundaries. The Mach number is 0.3, the Reynolds number is 10,000, and the angle of attack is 3 degrees.

Fig. 1: Mach number distribution around NACA0012 (Mach number 0.3, Reynolds number 10,000, angle of attack 3 degrees)
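The image-point idea can be sketched with the classic ghost-cell construction, which works in three steps:

- A ghost point inside the body is mirrored across the wall to an image point in the fluid.
- The flow variable at the image point is obtained by bilinear interpolation on the equally spaced grid.
- A no-slip ghost value is then set by reflection.

This Python sketch assumes a circular wall and a single uniform grid. The function names are hypothetical. The report's use of the IP to compute convective and viscous fluxes may differ from this ghost-cell variant.

```python
import math


def image_point_circle(gx, gy, cx, cy, r):
    """Mirror a ghost point inside a circular body (assumed geometry) across its wall.

    Returns the image point (IP) and the boundary intercept (BI), the wall
    point closest to the ghost point. The ghost point must not be the circle
    centre, where the wall normal is undefined.
    """
    dx, dy = gx - cx, gy - cy
    d = math.hypot(dx, dy)
    nx, ny = dx / d, dy / d                    # outward unit normal through the ghost point
    bix, biy = cx + r * nx, cy + r * ny
    ipx, ipy = 2.0 * bix - gx, 2.0 * biy - gy  # BI is the midpoint of ghost and IP
    return (ipx, ipy), (bix, biy)


def bilinear(field, h, x, y):
    """Bilinearly interpolate field[i][j], sampled at (i*h, j*h), at point (x, y)."""
    i = min(int(x // h), len(field) - 2)       # clamp so i + 1 stays in range
    j = min(int(y // h), len(field[0]) - 2)
    tx, ty = x / h - i, y / h - j
    return ((1 - tx) * (1 - ty) * field[i][j]
            + tx * (1 - ty) * field[i + 1][j]
            + (1 - tx) * ty * field[i][j + 1]
            + tx * ty * field[i + 1][j + 1])


def no_slip_ghost_value(field, h, ghost, centre, r):
    """Ghost value -u(IP): the linear profile from ghost to IP then vanishes at BI."""
    (ipx, ipy), _ = image_point_circle(ghost[0], ghost[1], centre[0], centre[1], r)
    return -bilinear(field, h, ipx, ipy)
```

Bilinear interpolation reproduces linear fields exactly, so a linear test field such as f = 2x + 3y is a convenient check of the interpolation step.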

Publications

■ Presentations

1) H. Shibata, M. Sato, Y. Fukushima, S. Tsutsumi, T. Nonomura, S. Kawai and R. Takaki, Development of a compressible flow solver using hierarchical Cartesian grids - toward the age of exa-scale supercomputers -, 29th International Conference on Parallel Computational Fluid Dynamics.

2) H. Shibata, M. Sato, S. Tsutsumi, Y. Fukushima, T. Nonomura, S. Kawai and R. Takaki, Investigation of flux based wall boundary condition for hierarchical Cartesian grid method, 49th Fluid Dynamics Conference/35th Aerospace Numerical Simulation Symposium.

3) H. Shibata, Y. Fukushima, S. Tsutsumi, Y. Kuya, S. Kawai and R. Takaki, Assessment of wall boundary conditions for a Cartesian grid method, 31st Computational Fluid Dynamics Symposium.

4) R. Takaki, Characterization of PRIMEHPC FX100 by CFD solver using structured grid method, 1st Workshop of HPC MONOZUKURI on Post-K Priority Issues 6 and 8.

5) R. Takaki, A challenge to analysis of real complicated configurations of aircraft -Sub-issue D-, 1st Workshop of HPC MONOZUKURI on Post-K Priority Issues 6 and 8.

6) R. Takaki, Characterization of many core CPU by CFD programs, 49th Fluid Dynamics Conference/35th Aerospace Numerical Simulation Symposium.

7) R. Takaki, H. Shibata, S. Kawai, Y. Fukushima, S. Tsutsumi and Y. Kuya, A challenge to analysis of real flight Reynolds number flows by Post-K computer, 55th Aircraft Symposium.

8) R. Takaki, High performance computing by many-core based CPU, 3rd Symposium on Space Science Informatics.

9) R. Takaki, Toward the achievement of the aerodynamic characteristic evaluation for real configurations and real flight environments of aircraft, 3rd Symposium on Post-K computer Priority Issue 8.

Usage of JSS2

Computational Information

- Process Parallelization Methods: MPI

- Thread Parallelization Methods: OpenMP

- Number of Processes: 2 - 20

- Elapsed Time per Case: 20.00 hours

Resources Used

Fraction of Usage in Total Resources*1(%): 0.76

Details

Please refer to System Configuration of JSS2 for the system configuration and major specifications of JSS2.

| System Name | Amount of Core Time (core × hours) | Fraction of Usage*2 (%) |
|---|---|---|
| SORA-MA | 6,186,602.05 | 0.81 |
| SORA-PP | 759.84 | 0.01 |
| SORA-LM | 0.00 | 0.00 |
| SORA-TPP | 0.00 | 0.00 |

| File System Name | Storage Assigned (GiB) | Fraction of Usage*2 (%) |
|---|---|---|
| /home | 3,310.17 | 2.29 |
| /data | 21,116.11 | 0.39 |
| /ltmp | 10,782.88 | 0.81 |

| Archiver Name | Storage Used (TiB) | Fraction of Usage*2 (%) |
|---|---|---|
| J-SPACE | 38.98 | 1.68 |

*1: Fraction of Usage in Total Resources: Weighted average of three resource types (Computing, File System, and Archiver).

*2: Fraction of Usage: Percentage of usage relative to each resource used in one year.
