Research of Tropical Rainfall Measuring Mission (TRMM)/ Precipitation Radar (PR)

JAXA Supercomputer System Annual Report April 2016-March 2017

Report Number: R16E0092

- Responsible Representative: Teruyuki Nakajima (Earth Observation Research Center)

- Contact Information: Takuji Kubota (kubota.takuji@jaxa.jp)

- Members: Tomohiko Higashiuwatoko, Naofumi Yoshida, Yoriko Arai, Shinji Ohwada, Hideaki Hase, Yukinori Arima, Misao Yamamoto, Kazuhiro Sakamoto, Tomoko Tajima

- Subject Category: Space (Satellite utilization)

Abstract

We performed test processing for the version upgrade of the GSMaP algorithm (V4) and used it to produce a long-term dataset. We also performed long-term test processing of PR V8 (PU1, PU2) and DPR V5 (L2Ku, L2Ka, L2DPR), which supports algorithm evaluation and improvement.

Goal

Production of a global rainfall dataset derived from the Tropical Rainfall Measuring Mission (TRMM).

Experimental production of the new version (V8) of the TRMM Precipitation Radar (PR) product.

Objective

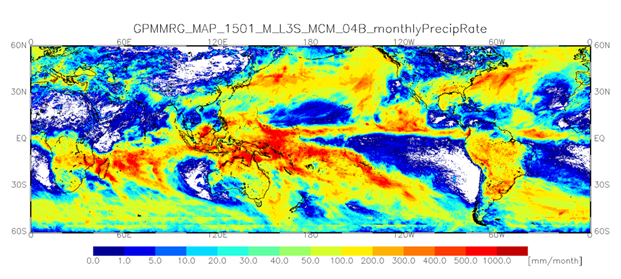

Calculation of global rainfall maps for the period from 2000 to 2010 using the GSMaP algorithm (V03 and V04).

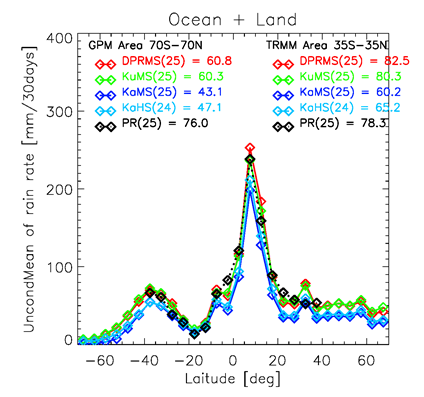

Consistency checks between the TRMM/PR algorithm (V08) and the GPM/DPR algorithm (V05) for June 2014, when both the TRMM and GPM missions were in operation.

References and Links

Please refer to ‘JAXA Global Rainfall Watch by JAXA GSMaP’.

Use of the Supercomputer

Processing of long-term precipitation data from multiple satellites and sensors, with turnaround times short enough to support algorithm evaluation, algorithm improvement, and long-term production.

Necessity of the Supercomputer

Because the processing algorithms are complex, the operation side must manage schedules strictly and handle emergencies and detailed user requests. Without JSS2, reprocessing within a short period would not be achievable.

Achievements of the Year

Test processing for the version upgrade of the Global Satellite Mapping of Precipitation (GSMaP) algorithm to V4, and long-period reprocessing for release, have been completed.

Long-period test processing of PR V8 (PU1, PU2) and DPR V5 (L2Ku, L2Ka, L2DPR) has been completed for evaluation, improvement, and release. In total, more than 40 years of data were processed.

Publications

Presentations

1) T. Kubota, R. Oki, M. Kachi, T. Masaki, Y. Kaneko, K. Furukawa, Y. N. Takayabu, T. Iguchi, and K. Nakamura, Current status of the Global Precipitation Measurement (GPM) mission in Japan, SPIE AP 2016, New Delhi, India, Apr. 2016 (invited).

2) T. Kubota, M. Kachi, R. Oki, K. Aonashi, T. Ushio, S. Shige, Y. N. Takayabu, Global Satellite Mapping of Precipitation (GSMaP) Product in the GPM Era and its Validation, SPIE AP 2016, New Delhi, India, Apr. 2016.

3) T. Kubota, M. Kachi, R. Oki, Quasi-realtime version of the Global Satellite Mapping of Precipitation (GSMaP_NOW) over the Himawari-8 region, JpGU 2016, Makuhari, Chiba, Japan, May 2016.

4) T. Kubota, R. Oki, M. Kachi, K. Furukawa, T. Masaki, Y. Kaneko, Y. N. Takayabu, T. Iguchi, and K. Nakamura, Current status of the Global Precipitation Measurement (GPM) mission in Japan, AOGS2016, Beijing, China, Aug. 2016.

5) T. Kubota, R. Oki, K. Furukawa, M. Kachi, T. Iguchi (NICT), and Y. N. Takayabu, Current status of the Global Precipitation Measurement (GPM) mission in Japan, 8th Workshop of International Precipitation Working Group, Oct. 2016.

6) T. Kubota, Global Satellite Mapping of Precipitation (GSMaP) overview, The 5th Global Precipitation Measurement (GPM) Asia Workshop on Precipitation Data Application Technique, Tokyo, Japan, Jan. 2016.

Computational Information

- Parallelization Methods: Serial

- Process Parallelization Methods: n/a

- Thread Parallelization Methods: n/a

- Number of Processes: 60, 75, 16

- Number of Threads per Process: 1

- Number of Nodes Used: 10, 25

- Elapsed Time per Case (Hours): 24, 48

- Number of Cases: 10, 30

Resources Used

Total Amount of Virtual Cost (Yen): 3,155,035

Breakdown List by Resources

| System Name | Amount of Core Time (core × hours) | Virtual Cost (Yen) |
|---|---|---|
| SORA-MA | 0.00 | 0 |
| SORA-PP | 242,077.44 | 2,066,857 |
| SORA-LM | 1,854.10 | 41,717 |
| SORA-TPP | 62,193.14 | 917,037 |

| File System Name | Storage Assigned (GiB) | Virtual Cost (Yen) |
|---|---|---|
| /home | 63.58 | 599 |
| /data | 635.78 | 5,997 |
| /ltmp | 13,020.84 | 122,825 |

| Archiving System Name | Storage Used (TiB) | Virtual Cost (Yen) |
|---|---|---|
| J-SPACE | 0.00 | 0 |

Note: Virtual Cost is the cost calculated from the unit price list of the JAXA Facility Utilization Program (2016).
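As a simple cross-check of the breakdown tables, the Python sketch below sums the per-resource virtual costs (figures copied from the tables; the dictionary names are illustrative only). The line items add up to 3,155,032 yen, 3 yen less than the reported total. A plausible explanation, which is an assumption since the unit prices are not listed here, is that each line item is rounded to whole yen separately from the total.

```python
# Per-resource virtual costs in yen, copied from the breakdown tables above.
compute_costs = {"SORA-MA": 0, "SORA-PP": 2_066_857, "SORA-LM": 41_717, "SORA-TPP": 917_037}
storage_costs = {"/home": 599, "/data": 5_997, "/ltmp": 122_825}
archive_costs = {"J-SPACE": 0}

# Total as stated in "Total Amount of Virtual Cost (Yen)".
REPORTED_TOTAL = 3_155_035


def summed_total() -> int:
    """Sum every line item across the compute, file-system, and archive tables."""
    return (
        sum(compute_costs.values())
        + sum(storage_costs.values())
        + sum(archive_costs.values())
    )


if __name__ == "__main__":
    total = summed_total()
    print(f"sum of line items: {total:,} yen")
    print(f"reported total:    {REPORTED_TOTAL:,} yen")
    # Assumed to be a rounding artifact; the report does not say.
    print(f"difference:        {REPORTED_TOTAL - total:,} yen")
```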
