Investigation on Fine-scale Scalar Mixing in High Reynolds Number Turbulent Jets

JAXA Supercomputer System Annual Report April 2016-March 2017

Report Number: R16E0065

- Responsible Representative: Yuichi Matsuo (Aeronautical Technology Directorate, Numerical Simulation Research Unit)

- Contact Information: Shingo Matsuyama (smatsu@chofu.jaxa.jp)

- Members: Shingo Matsuyama

- Subject Category: Basic Research (CFD, other)

Abstract

In this research, large-scale direct numerical simulations (DNS) of turbulent flow are performed on a supercomputer to clarify the role of very small-scale turbulence in the mixing of fuel and air. The role of fine-scale turbulence is investigated by analyzing simulation data obtained at several Reynolds numbers, the parameter that governs the turbulence intensity.

Goal

In this study, DNS and high-resolution implicit large-eddy simulations (LES) are performed for turbulent planar jets with scalar mixing to clarify the role of fine-scale turbulent scalar mixing at high Reynolds numbers. The results improve the understanding of turbulent scalar mixing at fine scales and thereby contribute to better SGS modeling of scalar mixing in high Reynolds number turbulence.

Objective

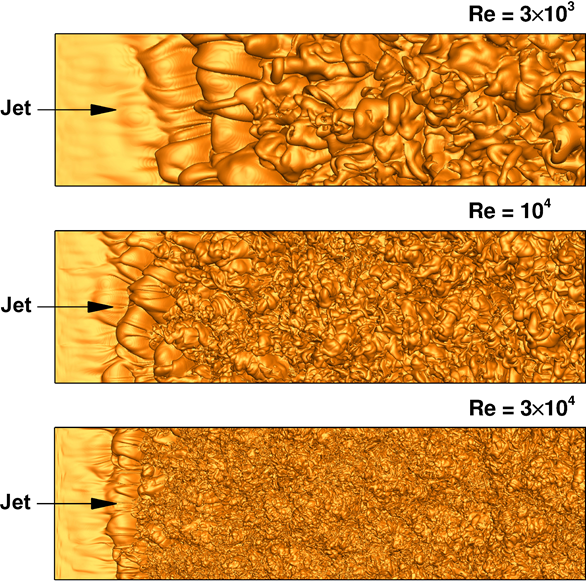

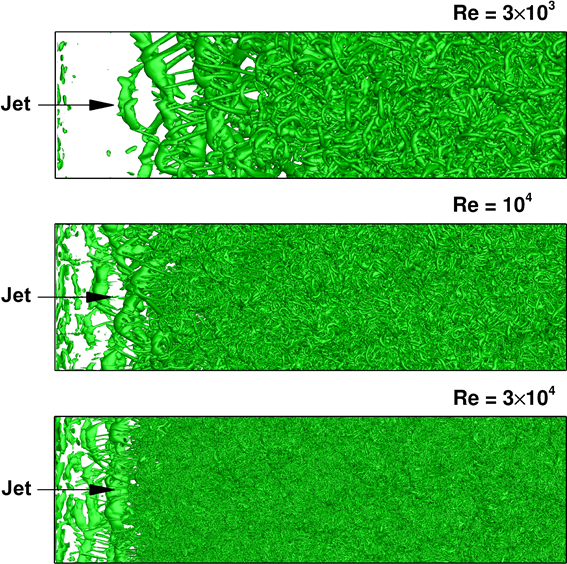

In this study, DNSs are performed for a planar turbulent jet with scalar mixing at Reynolds numbers from 3 × 10³ to 3 × 10⁴. By analyzing the results at Re = 3 × 10³, 10⁴ and 3 × 10⁴, we evaluate the Re-dependence of the turbulent scalar mixing process. Furthermore, we evaluate a threshold length scale below which fine-scale turbulence does not affect the macro-scale mixing of the jet. For this purpose, the sub-grid scale (SGS) scalar flux is obtained by filtering the DNS data and compared with the grid scale (GS) scalar flux. By evaluating the ratio of SGS flux to GS flux while varying the filter width, we identify the scale at which the contribution of the SGS flux becomes negligibly small.
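A minimal sketch of the filtering procedure described above, written under simplifying assumptions that are not taken from this report: a one-dimensional periodic grid, a top-hat (box) filter, a single velocity component `u` and scalar `phi`, and hypothetical synthetic fields standing in for DNS data. The GS flux is taken as the product of the filtered fields, and the SGS flux as the filtered product minus that GS flux. The helper names `box_filter` and `sgs_to_gs_ratio` are illustrative only, not the study's code.

```python
import math

def box_filter(f, width):
    """Top-hat filter on a periodic 1-D grid; width is an odd number of grid cells."""
    if width < 1 or width % 2 == 0:
        raise ValueError("width must be a positive odd integer")
    n = len(f)
    half = width // 2
    return [sum(f[(i + k) % n] for k in range(-half, half + 1)) / width
            for i in range(n)]

def sgs_to_gs_ratio(u, phi, width):
    """Mean |SGS scalar flux| divided by mean |GS scalar flux| for one filter width.

    GS flux:  filter(u) * filter(phi)
    SGS flux: filter(u * phi) - filter(u) * filter(phi)
    """
    u_bar = box_filter(u, width)
    phi_bar = box_filter(phi, width)
    uphi_bar = box_filter([a * b for a, b in zip(u, phi)], width)
    gs = [a * b for a, b in zip(u_bar, phi_bar)]
    sgs = [c - g for c, g in zip(uphi_bar, gs)]
    return sum(abs(s) for s in sgs) / sum(abs(g) for g in gs)

# Hypothetical synthetic fields: one large-scale mode plus one correlated
# small-scale mode (wavelength of 16 grid cells) in both u and phi.
N = 256
x = [2.0 * math.pi * i / N for i in range(N)]
u = [1.0 + 0.5 * math.sin(xi) + 0.3 * math.sin(16 * xi) for xi in x]
phi = [0.5 + 0.3 * math.cos(xi) + 0.3 * math.sin(16 * xi) for xi in x]

for w in (1, 5, 9, 15):
    print(f"filter width {w:2d} cells: SGS/GS = {sgs_to_gs_ratio(u, phi, w):.4f}")
```

With these synthetic fields the ratio is zero when the filter spans a single cell and grows as the filter widens and removes more of the small-scale mode. In the study, the corresponding ratio would be evaluated on three-dimensional DNS fields, possibly with a different filter kernel.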

References and Links

Please refer to ‘KAKEN — Research Projects | Elucidation of Fine-scale Scalar Mixing Processes in High Reynolds Number Turbulent Jets (KAKENHI-PROJECT-15K05817)’.

Use of the Supercomputer

We used the supercomputer system to perform DNSs on 240 million and 1.3 billion grid points for Re = 10⁴ and 3 × 10⁴, respectively.

Necessity of the Supercomputer

To clarify the role of fine-scale turbulence in the scalar mixing process, DNS statistics at high Reynolds numbers are required. A DNS at Re > 10⁴ requires a computational mesh on the order of one billion grid points. Simulations of this size can be executed only on a supercomputer, so the supercomputer system is indispensable for this research.
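As a rough illustration of why these mesh sizes call for a supercomputer, the sketch below estimates the memory needed to hold a single double-precision snapshot of the solution on the two grids reported above. The number of stored variables per grid point (six: density, three momentum components, total energy and one scalar) is an assumption about a compressible formulation, not a detail given in this report, and the names are hypothetical.

```python
# Rough memory estimate for one solution snapshot.
# ASSUMPTION: 6 stored variables per grid point (density, 3 momentum components,
# total energy, 1 scalar) in double precision; not stated in this report.
BYTES_PER_VALUE = 8      # IEEE 754 double precision
N_VARIABLES = 6          # assumed, see above
GIB = 2 ** 30

def snapshot_bytes(grid_points, n_variables=N_VARIABLES, bytes_per_value=BYTES_PER_VALUE):
    """Bytes needed to store n_variables double-precision values at every grid point."""
    return grid_points * n_variables * bytes_per_value

# Grid sizes reported for the two higher-Re cases.
cases = {"Re = 1e4": 240_000_000, "Re = 3e4": 1_300_000_000}

for label, points in cases.items():
    size = snapshot_bytes(points)
    print(f"{label}: {points:,} points -> {size / GIB:.1f} GiB per snapshot")
```

Under this assumption the 1.3-billion-point mesh needs about 58 GiB for a single snapshot. An actual solver also keeps time-integration stages, fluxes and metrics, so its working memory is several times larger.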

Achievements of the Year

DNSs were performed at Re = 3 × 10³, 10⁴ and 3 × 10⁴. For Re = 3 × 10³ and 10⁴, the influence of spatial accuracy on the turbulence analysis results was evaluated using 3rd-, 5th-, 7th- and 9th-order schemes. The DNS at Re = 3 × 10⁴ was performed on 1.3 billion grid points with 9th-order spatial accuracy. Comparison with past experimental data and evaluation of the Kolmogorov length scale confirmed that the present DNS correctly reproduces the Re-dependence of the planar jet.
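The Kolmogorov length scale mentioned above is η = (ν³/ε)^(1/4), where ν is the kinematic viscosity and ε the mean dissipation rate of turbulent kinetic energy. The sketch below shows one common way to evaluate η and the ratio of grid spacing to η. It assumes the incompressible form ε = 2ν S_ij S_ij computed from a single velocity-gradient tensor, whereas a DNS would average ε over time and homogeneous directions. Function names and sample values are hypothetical, and the acceptable Δ/η threshold depends on the numerical scheme, so it is left to the caller.

```python
def dissipation_rate(nu, grad):
    """epsilon = 2 * nu * S_ij S_ij, with grad[i][j] = du_i/dx_j (3x3 nested lists).

    Incompressible form; S is the symmetric part of the velocity-gradient tensor.
    """
    s2 = 0.0
    for i in range(3):
        for j in range(3):
            s = 0.5 * (grad[i][j] + grad[j][i])
            s2 += s * s
    return 2.0 * nu * s2

def kolmogorov_length(nu, eps):
    """Kolmogorov length scale eta = (nu^3 / eps)^(1/4)."""
    return (nu ** 3 / eps) ** 0.25

def resolution_ratio(dx, nu, eps):
    """Grid spacing expressed in Kolmogorov lengths (dx / eta)."""
    return dx / kolmogorov_length(nu, eps)

# Hypothetical example: simple shear du/dy = 2 with nu = 1e-4 and dx = 0.01.
shear = [[0.0, 2.0, 0.0],
         [0.0, 0.0, 0.0],
         [0.0, 0.0, 0.0]]
nu = 1.0e-4
eps = dissipation_rate(nu, shear)
print(f"eps = {eps:.3e}, eta = {kolmogorov_length(nu, eps):.3e}, "
      f"dx/eta = {resolution_ratio(0.01, nu, eps):.2f}")
```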

Movie 1: Top view of iso-surfaces of scalar mass fraction for the DNS at Re = 3 × 10⁴

Movie 2: Top view of iso-surfaces of positive Q-criterion for the DNS at Re = 3 × 10⁴
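The Q-criterion used to visualize vortical structures in Movie 2 is Q = ½(‖Ω‖² − ‖S‖²), where S and Ω are the symmetric (strain-rate) and antisymmetric (rotation-rate) parts of the velocity-gradient tensor ∂u_i/∂x_j; regions with Q > 0 are dominated by rotation rather than strain. A minimal pointwise sketch follows. The function name is hypothetical, and a real post-processor would apply it to gradients computed over the full DNS grid.

```python
def q_criterion(grad):
    """Q = 0.5 * (|Omega|^2 - |S|^2) for grad[i][j] = du_i/dx_j (3x3 nested lists)."""
    s2 = 0.0  # squared Frobenius norm of the strain-rate tensor S
    w2 = 0.0  # squared Frobenius norm of the rotation-rate tensor Omega
    for i in range(3):
        for j in range(3):
            s = 0.5 * (grad[i][j] + grad[j][i])
            w = 0.5 * (grad[i][j] - grad[j][i])
            s2 += s * s
            w2 += w * w
    return 0.5 * (w2 - s2)

# Solid-body rotation about z (u = -y, v = x): pure rotation, so Q > 0.
rotation = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
# Planar pure strain (u = x, v = -y): no rotation, so Q < 0.
strain = [[1.0, 0.0, 0.0], [0.0, -1.0, 0.0], [0.0, 0.0, 0.0]]

for name, g in (("rotation", rotation), ("strain", strain)):
    print(f"{name}: Q = {q_criterion(g)}")
```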

Publications

Peer-reviewed articles

1) S. Matsuyama, ‘Implicit Large-Eddy Simulation of Turbulent Planar Jet with Scalar Mixing,’ AIAA Journal (in preparation for submission).

Computational Information

- Parallelization Methods: Hybrid Parallelization

- Process Parallelization Methods: MPI

- Thread Parallelization Methods: OpenMP, Automatic Parallelization

- Number of Processes: 286-924

- Number of Threads per Process: 4-8

- Number of Nodes Used: 72-143

- Elapsed Time per Case (Hours): 120-1000

- Number of Cases: 8

Resources Used

Total Amount of Virtual Cost (Yen): 48,418,440

Breakdown List by Resources

| System Name | Core Time (core × hours) | Virtual Cost (Yen) |
|---|---|---|
| SORA-MA | 29,960,762.44 | 48,381,309 |
| SORA-PP | 0.01 | 0 |
| SORA-LM | 0.00 | 0 |
| SORA-TPP | 0.00 | 0 |

| File System Name | Storage Assigned (GiB) | Virtual Cost (Yen) |
|---|---|---|
| /home | 598.94 | 5,649 |
| /data | 2,849.03 | 26,874 |
| /ltmp | 488.28 | 4,605 |

| Archiving System Name | Storage Used (TiB) | Virtual Cost (Yen) |
|---|---|---|
| J-SPACE | 0.00 | 0 |

Note: Virtual Cost is the cost calculated using the unit price list of the JAXA Facility Utilization program (2016).
